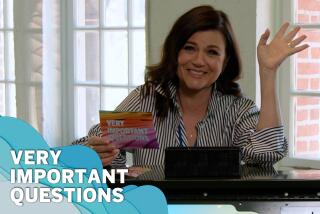

Q&A: Cathy O’Neil, author of ‘Weapons of Math Destruction,’ on the dark side of big data

Cathy O’Neil calls herself a data skeptic. A former hedge fund analyst with a PhD in mathematics from Harvard University, the Occupy Wall Street activist left finance after witnessing the damage wrought by faulty math in the wake of the housing crash.

In her latest book, “Weapons of Math Destruction,” O’Neil warns that the statistical models hailed by big data evangelists as the solution to today’s societal problems, like which teachers to fire or which criminals to give longer prison terms, can codify biases and exacerbate inequalities. “Models are opinions embedded in mathematics,” she writes.

Although algorithms are everywhere, the most dangerous ones, according to O’Neil, have three characteristics: scale, secrecy and the capacity to do harm.

Recently reached by phone, O’Neil spoke about the prevalence of these “weapons of math destruction” across different industries. The conversation has been edited for length and clarity.

When did you first realize that big data could be used to perpetuate inequality?

I found out that the work I was doing on tailored advertising was a mechanism for for-profit colleges to find vulnerable, single black mothers. Find their pain points and promise them a better life if they signed up for online courses, which in the meantime loaded them up with debt and gave them a useless education. I was like, “That’s not helping anyone; that’s making their struggles worse, and it’s happening on my watch because I am the one building the technology for this to work very efficiently.”

What is a new example of a weapon of math destruction?

Recently, I was convinced by Mona Chalabi, who is a journalist at the Guardian but who also spent time at FiveThirtyEight, that political polls are actually weapons of math destruction. They’re very influential; people spend enormous amounts of time on them. They’re relatively opaque. But most importantly, they’re destructive in various ways. In particular, they actually affect people’s voting patterns. The day before the election, if people think their candidate is definitely going to win, then why bother voting? Polls can change people’s actual behavior, which disrupts democracy in a direct way.

People are trying to analyze how demographics shaped the election results. The answer is that we’re probably not going to know or have enough information to make an educated guess until much later.

That’s right. Also, there really were new things about this election cycle that we did not have data on, so we couldn’t account for them. But I’m not suggesting that all we need to do is correct the polls and next time they’ll be more accurate and therefore better. I’m actually trying to make the argument that we should just not do them. I honestly feel like if we had a thought experiment where nobody did polls and nobody talked about polls and we all just talked about the actual issues of the campaign, then we’d have a much better democracy.

In your book, you describe some relatively well-known examples of potentially harmful algorithms, such as value-added models that grade public schoolteachers based on student test scores. You tried to get the source code behind that model from the Department of Education in New York City, but you weren’t able to. Their defense was probably that if people knew how the scores were calculated, then teachers would be able to game the system to get higher scores.

Well, the very teachers whose jobs are on the line don’t understand how they’re being evaluated. I think that’s a question of justice. Everyone should have the right to know how they’re being evaluated at their job. And I should have the right to understand those models as well because I’m a taxpayer, and the job is a government position. The Freedom of Information Act should apply.

Also, if you use the word “gaming,” first you’re implying that there’s a bad actor involved, which sometimes there is. Second, you can really only game a model if it’s weak. The weakness of the teacher value-added model is that it’s statistically terrible. Anybody whose job is on the line deserves to understand that weakness. And deserves to, for that matter, take advantage of it if they can. But my goal isn’t for a bunch of teachers to sneakily get better scores. My goal is for the model itself to be held to high standards.

In some cases, the policymakers themselves probably don’t even know how the scores are calculated.

In the case that I wrote about in my book, nobody in New York City had access to that formula. Nobody. The Department of Education did not know how to explain the scores that they were giving out to teachers.

Los Angeles’s Department of Children and Family Services has been exploring a risk-modeling algorithm called AURA. It was developed by SAS, a private contractor, and it scores children according to their risk of being abused so that social workers can better target their efforts. Something like this could be a weapon of math destruction — it has scale, and the formula is secret — or it could be benign.

Or even positive. It really depends on what exactly they’re doing with those scores. It also depends on how those scores are created. Even if they’re being somewhat punitive, if they’re doing it in a way that has been discussed as morally fair, then that’s probably still OK. If they’re finding kids at risk of child abuse and they’re removing them from families when they have just cause, then we should think of that as a good thing. What would not be OK is if the score was elevated simply because somebody happened to be black or happened to be poor.

So you’re less worried about models that target people in order to help.

It’s tricky because there are different stakeholders. People who are advocating on behalf of the children might be perfectly OK with using questionable attributes that are proxies for race and class that also are proxies for other things that actually put these kids at higher risk. It’s not a clear-cut case, even when there is a punitive result. You have to weigh the possibility of letting a child get abused when you could have prevented it against the possibility of punishing a parent who wasn’t going to abuse their child. That decision could be implemented by the data scientist, but it should not be up to the data scientist to decide the answer to that question.

Should these issues be discussed before the algorithms are deployed?

I want to separate the moral conversations from the implementation of the data model that formalizes those decisions. I want to see algorithms as formal versions of conversations that have already taken place.

In addition to such conversations, you call for the auditing of algorithms, after they have been in use, to see whether they are, in fact, fair. Have you seen this happening in practice?

I just started a business called ORCAA [O’Neil Risk Consulting and Algorithmic Auditing]. My goal is to do the auditing of the algorithms. I would love to help the people who want to use AURA, for example.

Does anything like that exist already?

There have been a few algorithms audited. Notably, ProPublica did an audit of COMPAS, a recidivism risk algorithm. It’s not a full-blown audit. It didn’t go as far as I would have liked in understanding the different stakeholders. Just like there are advocates for the children versus the advocates for the parents in the case of AURA, with COMPAS, there are people — the police — who care about getting the bad guys, and there are people who care about making sure that black men don’t go to prison longer just because they’re black — the civil rights activists. The civil rights activists and the police need to have a conversation where they weigh the chances of letting a man go free who’s going to commit a further crime versus the chance of putting a man in jail for something that he didn’t do and will not do. That same kind of balanced conversation has to take place.

Also, ProPublica is a news organization. You would want to work for the policymakers themselves.

Yes, and I would want to sign an NDA. I wouldn’t write it up. If you’re trying to use an algorithm, I’m going to help you make sure it’s fair. Alternatively, if there’s a class of people who think that they’re unfairly judged by an algorithm and they want me to help them prove that, then I could do that, too.

Have you gotten a lot of demand for these services?

I have zero clients. A bunch of people are thinking about it. Big data is a new field, and people are essentially blindly trusting it. Also, people are still living in the era of plausible deniability. They don’t want to know that their data is racist or whatever, and so far, they’ve been getting away with not knowing. What would make them want to know? Only if there are legal reasons for them to want to know, or reputational risk reasons for them to want to know, or if there’s simply an overwhelming demand by the public.

Have you seen any creative ways in which algorithms are adopted by the people rather than by the powerful?

I’ve seen some small examples. I wrote a post a couple of months ago on [my blog] mathbabe about a college that was using big data to help find kids who would need advising.

What are some examples and characteristics of the opposite of a weapon of math destruction?

My theory is that if it’s scaled and it’s secret, then it had better be obviously not destructive. Or, if it’s scaled and there’s potential for destruction, then you have to make it transparent. If there’s potential, then we need to know more. AURA fits this perfectly. It is potentially destructive, so we need to know that it is not unfairly interfering with vulnerable families.

It’s not obvious. Somebody really needs to worry about those kids. I speak as a child who was abused, and I would have loved for there to have been [data-driven] interventions when I was a child. We didn’t even think about that stuff back then.

One defense of algorithms is that they are less biased than humans.

Some people just assume that. They don’t check it.

Is it hard to tell if the algorithm is better?

It’s impossible to guess; you have to actually look at the data. That’s the kind of auditing I want to do. I don’t want to just audit a specific algorithm by itself; I want to audit the algorithm in the context of where it’s being used. And compare it to that same context without the algorithm. Like a meta-audit. Is the criminal justice system better off with recidivism-risk algorithms or without recidivism-risk algorithms? That’s an important question that we don’t know the answer to. We also have the question of whether a specific recidivism-risk algorithm is itself racist. You could find that a specific algorithm is racist, but it’s still better than the status quo.

You’ve worked in academia, finance, advertising, tech, activism and journalism. What advice would you give to socially conscious data scientists in each of these fields?

That’s a hard question. First of all, I’d want them to all take ethics seriously. But it is a challenge. Just taking a job as a data scientist means you’re probably working for a company for whom success means profit. So you might find yourself in the same kind of situation I found myself in, where I wasn’t directly working for a for-profit college, but I was working in an industry that helped those kinds of places survive and flourish.

Maybe data science courses should also teach ethics.

That’s definitely something I’m calling for. Every computer science major and any kind of data science program should have ethics, absolutely.
