Op-Ed: How Facebook exploited us all

It’s even worse than I feared.

I left Facebook in 2013, less for my own sake than for what my presence on the service was doing to others. I knew that anyone who “liked” my page could have their data harvested in ways they wouldn’t necessarily approve.

Over the past five years, people have not only become aware of this devil’s bargain but accepted it as the internet’s price of admission. “So what if they have my data,” I saw a graduate student ask her professor this week. “Why is my privacy so important?”

Bully for you if you don’t care what Facebook’s algorithms know about your sex life or health history, but that’s not the real threat. Neither Facebook nor the marketers buying your data particularly care about what you do with your clothes off, whom you’re cheating with or any other sordid details you may find embarrassing.

That’s the great fiction of social media: That you matter as a person. You don’t.

The platform cares only about your metadata, from which it can construct a psychological profile and then manipulate your behavior. It has been using and selling even the stuff you thought you were sharing confidentially with your friends in order to identify your neuroses and vulnerabilities and leverage them against you.

That’s what Facebook markets to its customers. The company has been doing it ever since its investors realized that, as owners of a mere social network, they would become only multi-millionaires; to become billionaires, they’d have to offer something more than our attention to ads. So they sold access to our brain stem.

With 2.2 billion active users, Facebook knew it had a big-data gold mine. While we’ve been busily shielding what we think of as our “personal” data, Facebook has been analyzing the stuff we think doesn’t matter: our clicks, likes and posts, as well as the frequency with which we make them. Looking at this metadata, Facebook, its psychologists and its clients put us into different psychographic “buckets.”

That’s how they came to be able to predict, with about 80% accuracy, our future behaviors, including whether we’re going to go on a diet, vote for a particular candidate or announce a change in sexual orientation. From there, the challenge is to compel the lagging 20% to fall in line — to get all the people who should be going on a diet or voting for a particular candidate to conform to what the algorithms have predicted.

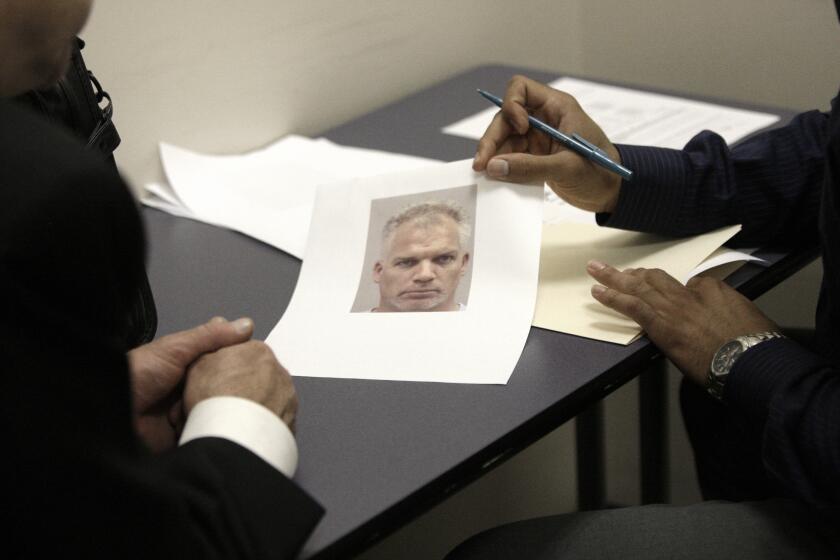

That’s where companies like Cambridge Analytica come in. They paid thousands of people to take psychology tests and to surrender their own and their friends’ Facebook data. Then they compared all this data to infer how each of us would have answered that psychology test. Armed with our real or algorithmically determined psychological profiles, Cambridge Analytica surmised our individual neurotic makeups. And they figured out how to terrify each and every one of us.

That’s the greater collateral damage of social media. It’s not simply that they can get us to buy a particular product or vote for one candidate or another. It’s that their techniques bypass our higher brain functions. They use imagery and language specifically designed to evade our logic and empathy and appeal straight to our reptilian survival instincts.

These more primitive brain regions respond only to primitive stimulus: fear, hate and tribalism. It’s the part of us that gets activated when we see a car crash or a horror movie. That’s the state of mind these platforms want us to be in, because that’s when we are most easily manipulated.

Yes, we’ve been manipulated by ads for a century now. But TV and other forms of advertising generally happened in public. We all saw the same commercials, and they often cost so much that companies knew they had to get them right. Television networks would themselves censor ads that they felt would alienate their viewers or make fraudulent claims. It was manipulative, but for the most part, consumer advertising was aspirational.

Facebook figures out who or what each of us fears most, and then sells that information to the creators of false memes and the like, who deliver those fears directly to our news feeds. This, in turn, makes the world a more fearful, hostile and dangerous place.

To ask why one should care is a luxury of privilege. Data harvesting arguably matters most when it’s used against the economically disadvantaged. It’s not just in China that social media data are used to evaluate creditworthiness and immigration status. By normalizing the harvesting of data, those of us with little to fear imperil the most vulnerable.

When Mark Zuckerberg started Facebook, a friend of his expressed surprise that people were surrendering so much personal data to the platform. “I don’t know why,” Zuckerberg said. “They trust me. Dumb ...”

We may have been dumb to trust Facebook with our data in the first place. Now we know they’ve been using the data to make us even dumber.

Douglas Rushkoff is the author of 15 books, most recently “Throwing Rocks at the Google Bus.”
