New robot learns from plain speech, not computer code

In a small kitchen, an amateur cook roots around in the cupboard for a package of ramen. He fills a pot with water, tosses in the noodles, and sets them on the stove to cook. But this chef won’t enjoy the fruits of his labor. That’s because he’s a robot who is learning to translate simple spoken instructions into the complex choreography needed to make a meal.

This robot, developed in a lab at Cornell University in Ithaca, N.Y., was told to “fill the pot with ramen and water and cook it.”

It sounds simple, but turning that command into dinner is a lot to ask of a robotic brain: It must understand the words. It must know what a pot is and how to use it. It must know that packages of ramen are stored in a cabinet and that water pours from a faucet. Most important, it needs to know what cooking is and how to do it.

Ashutosh Saxena, head of Cornell’s Robot Learning Lab, has been training this particular robot for years, slowly adding components that allow the machine to interact intelligently with humans and the environment around it. On July 14, he and his colleagues will show off their progress at a robotics conference at UC Berkeley.

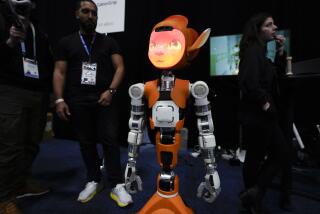

Saxena’s robot is a friendly-looking machine with a camera mounted in its pizza-box head that allows it to identify objects such as a cup and a sink. It has humorously exaggerated biceps and crab-claw hands with which it manipulates objects using actions such as “grasp” and “carry.”

But the robot’s most impressive feature is its growing ability to understand plain, old-fashioned English.

It’s not as simple as it sounds. “People may say, ‘Go to the cabinet and pick up ramen,’” Saxena said. “But you did not say, ‘Open the door.’” The robot must infer this step on its own to complete the task.

It must also tackle the problem of interpreting ambiguous language. Humans often speak in ways that must be maddeningly imprecise to a machine. Consider a demonstration in which a graduate student asks the robot to make a serving of Italian affogato, a dessert beverage.

These instructions should have led to a cyber-meltdown. “Take some coffee in a cup” (what does “take” mean?), “add ice cream of your choice” (which ice cream?), “finally, add raspberry syrup to the mixture” (what is a mixture in this context?).

How did the robot avert a crisis and succeed?

Saxena and his team programmed the robot to carry out the task using a series of very specific commands such as “find,” “grasp” and “scoop.” They also programmed it to know that these commands relate to specific objects such as a bowl, a spoon and ice cream. This taught the robot how the words in the instructions map onto things in its environment.

They also had the robot complete the task several different ways. For instance, it learned that both a stove and a microwave could be used to heat liquids.

Then the scientists rearranged the kitchen into an entirely new configuration and asked the robot to do the task again, but this time with a new set of instructions that differed in order, clarity, and completeness. And then they sat back and watched.

Two-thirds of the time, the robot successfully completed the task by drawing on its knowledge and matching the new commands with previous demonstrations as closely as possible. The more training it had, the better it did, and its practice in doing one task — making affogato — improved its performance on another — making ramen.
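For readers curious what “matching the new commands with previous demonstrations” might look like in software, here is a toy sketch in Python. It is not the Cornell team’s method, which this article does not describe in detail. It simply scores each stored demonstration by the share of words it has in common with a new instruction (a measure called Jaccard similarity) and reuses the action sequence of the closest match. The demonstration library, the action names and the functions `tokens`, `jaccard` and `closest_demo` are all hypothetical, invented for illustration.

```python
import re

# Hypothetical library of past demonstrations: each plain-English instruction
# maps to the sequence of (action, object) steps the robot was shown.
DEMOS = {
    "heat water in the pot on the stove": [
        ("find", "pot"), ("grasp", "pot"), ("fill", "pot"),
        ("place", "stove"), ("turn_on", "stove"),
    ],
    "take coffee in a cup and add ice cream": [
        ("find", "cup"), ("grasp", "cup"), ("fill", "cup"),
        ("scoop", "ice cream"),
    ],
}


def tokens(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a, b):
    """Shared words divided by total distinct words (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def closest_demo(instruction, demos):
    """Return the stored instruction most similar to `instruction`,
    together with its action sequence."""
    query = tokens(instruction)
    best = max(demos, key=lambda known: jaccard(query, tokens(known)))
    return best, demos[best]


if __name__ == "__main__":
    match, steps = closest_demo("fill the pot with water and cook it", DEMOS)
    print(match)
    for action, obj in steps:
        print(f"  {action} -> {obj}")
```

Asked to “fill the pot with water and cook it,” this sketch picks the stove demonstration because the two share the words “the,” “pot” and “water.” A word-overlap score like this one would miss much of what the article describes, such as inferring that a cabinet door must be opened before the ramen can be picked up.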

“That’s how people do it,” Saxena said. Humans, after all, learn by experience.

Traditionally, robots navigated the world by accessing a vast library of scripts that told them what to do in a specific scenario. But that has started to change in recent years, said Stefanie Tellex, a computer scientist at Brown University who was not involved in the study.

“A lot of previous work also takes this learning approach,” she said. What sets Saxena’s robot apart, she said, is the broad range of commands it can understand and the diverse tasks it can do.

“It’s a really exciting time for this area,” Tellex said. “Ashutosh is really uniquely positioned to carry out the next step. It’s really about bridging the gap between language, perception, action and the environment.”

For robots to take on the many tasks that await them, Saxena thinks they must be able to learn the way people do. Put simply, he said, “There is a limit to how much you can program.”

Saxena’s research group has now started a crowd-sourcing site to facilitate this. It’s called Tell Me Dave, where Dave refers to any human user who stops by. (Dipendra Misra, the doctoral student who came up with the name, denied that it’s a reference to the astronaut David Bowman in “2001: A Space Odyssey.”)

Volunteers can go to the site and play a video game in which they show the robot how to accomplish a task described in plain English, which is easy for people to understand. By clicking around a virtual environment, the user supplies all the steps that were missing from the instructions, and the order in which she would do them.

So far, volunteers have tried about 700 scenarios. Most of these take place in the kitchen, but Saxena is developing a virtual living room that the robot can set up for a party. More will follow after that. One day, Saxena hopes to reach 1 million simulations in a wide range of settings.

“What we need is a lot of data on how people do things and say things,” Saxena said. The robot gets “smarter every time someone plays our game.”

For all things science, follow me @ScienceJulia