‘Associative’ Memory Technology: The Brain May Yet Meet Its Match in a Computer

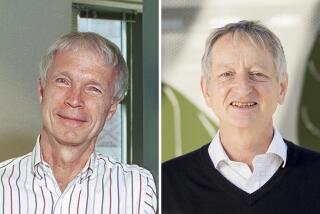

John Hopfield wants to make computers that are more like the human brain and less like electronic calculators, and if he succeeds, it could revolutionize information processing.

By redesigning the blueprints of computers to mimic the architecture of the brain, the Caltech biophysicist hopes to produce computers that will perform tasks thousands, perhaps even millions, of times faster than the best existing machines and be able to solve problems that conventional computers can now solve only with great difficulty.

The new computers would be particularly useful for such problems as pattern recognition--in which, for example, a computerized sentry would identify faces of individuals permitted access to a building or in which industrial robots could be made to see and discriminate among parts.

The new computers would also be compact, energy efficient and far more resistant than conventional computers to damage or wear. They might thus be the basis for artificial intelligences that could direct unmanned space probes for many years without human supervision.

Knowledge gained in designing the computers might also lead to a better understanding of how the brain works, particularly how memories are stored and recalled--and perhaps yield insight into ways to improve human memory.

Hopfield is not the only scientist with such visions. Spurred by Hopfield’s initial successes, many other scientists have begun to explore “associative” computer memories of the type conceived by him. Hopfield refers to such work as “making electronic components in the spirit of biology.”

Their initial results have the potential to revolutionize information processing. “It’s really a new kind of computing,” according to Alan Lapedes of the Los Alamos Scientific Laboratory.

Electronic and human brains are “two fundamentally different types of computers,” Hopfield said in a recent interview. “One is ideal for very precise computations, while the other is better suited for more ‘mushy’ types of problems, such as getting an overall impression from a visual scene or producing a complete picture from incomplete information.”

Ur brane, 4 xmpl, wil probly hav litul trubl undrstndng ths sntns. A conventional computer, however, would probably interpret it as gibberish.

Difference in Processing

On the other hand, your brain will have a very hard time calculating the square root of 203,472, while a computer can do it in a flash. This difference reflects differences in the way information is stored and processed.

In a conventional computer, information is stored at specific sites in the form of “gates” or switches that are either on or off. This information is called up only in response to specific commands.

Confronted with the phrase “Ur brane,” for example, the computer would search its memory for that specific sequence of characters and--most likely--not finding them, would be unable to act on the information.

In the human brain, in contrast, memories are not restricted to specific sites and the process of calling up memories is more generalized. The human brain would compare “Ur brane” to stored memories of words and conclude that the phrase means “Your brain.”

Many Associations

The human brain, furthermore, typically produces many associated memories for any given input. Mention of a woman’s name, for example, may also call up her face, her voice or the dinner shared with her two weeks ago.

It is this type of associative memory that Hopfield and others are trying to reproduce.

“The big difference between the brain and computers is in the hardware,” Hopfield said. In a computer, each transistor is typically connected to two or three others, and information flows in one direction only.

When one transistor is turned on, for example, it may cause a second to be turned on, the second will turn on a third, and so on. The fact that the second--or the third, or the sixteenth--has been turned on, however, has no effect on the first transistor.

In the brain, in contrast, each nerve cell or neuron is connected to thousands of others and information flows both ways. When one neuron turns on a second, it receives back a signal from the second that may or may not change it.

Five years ago, Hopfield began developing a mathematical description of how an associative memory could be constructed from conventional electronic components and how it would work. He found that the properties of such systems could be predicted and that they could be built. Associative memories can also be simulated on a conventional computer, but that simulation requires a large memory capacity.

Resistors Used

In practice, an associative memory is constructed by connecting each transistor in a circuit to every other transistor. Unlike conventional computers, in which the connections between transistors are made with simple wires, the connections in an associative memory are made with resistors.

In operation, the basic “cell” of the associative memory functions like an amplifier or a resistor, either increasing or damping the current that passes through it. The cell can thus register many different values, rather than just the on or off registered by the cell in a conventional computer.

“Most biologists think memories in the human brain are represented by the strength of the connections between individual neurons,” Hopfield said. “In the same way, information in an associative memory is represented by the strength of the resistors.”

Each “memory” or piece of information, furthermore, is spread throughout all the resistors in the circuit, and each circuit can store a large number of memories simultaneously.

The associative memory circuit recalls a memory by producing a certain output voltage when it is fed a voltage pattern representing part of the stored pattern. In effect, it might be stimulated with a voltage pattern “r bra” and recall the complete memory “Your brain.”

Deals With Misinformation

The memory circuit can also deal with misinformation. When Hopfield fed the circuit a voltage representing “mr bran,” for example, the circuit corrected the errors and again recalled the memory “Your brain.” “It is excruciatingly difficult to program digital computers to do this,” he said.
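The recall and error correction described above can be imitated in a few lines of software. What follows is only a rough sketch under stated assumptions, not Hopfield's circuit: each "cell" is reduced to a value of +1 or -1, the resistor strengths become a table of numbers built with a simple outer-product rule, and the function names `train` and `recall` are made up for illustration.

```python
# Illustrative software sketch of an associative memory (assumed simplification:
# units take the values +1 or -1; a 0 in a cue marks an unknown position).

def train(patterns):
    """Build a symmetric table of connection strengths from the stored patterns."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no unit is connected to itself
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, cue, max_sweeps=10):
    """Update one unit at a time until the whole pattern stops changing."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            total = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if total >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two made-up "memories", both stored in the same table of strengths.
memory_a = [1, 1, 1, 1, -1, -1, -1, -1]
memory_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([memory_a, memory_b])

noisy_cue = [-1, 1, 1, 1, -1, -1, -1, -1]   # memory_a with one wrong value
partial_cue = [1, 1, 1, 0, 0, -1, -1, -1]   # memory_a with two values missing

print(recall(weights, noisy_cue) == memory_a)
print(recall(weights, partial_cue) == memory_a)
```

In this toy case both the damaged cue and the incomplete cue settle back onto the first stored pattern, even though neither memory sits at any one location in the table.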

The associative memory shares certain other characteristics with the human brain. When Hopfield fed it an ambiguous sequence of voltages that could hint at either of two complete memories, the computer would normally choose the larger of the two memories--a behavior very much like the brain’s tendency to more readily remember important information.

Perhaps more intriguing is Hopfield’s discovery of a sort of “electronic inspiration.” The associative memory occasionally constructs new memories, which are actually combinations of the original stored memories.

These new memories are spurious in the present system, but their appearance suggests, Hopfield said, that the new systems might eventually be taught to correlate information and produce conjectures.

Besides storing information, the associative memories can also process it. For certain types of problems, the neural network computer is much faster than digital computers because all of the circuit elements are participating in the computation and calculations are proceeding simultaneously rather than sequentially as in conventional computers.

Making Optimum Choice

In particular, the neural network computers are better at making the optimum choice from a number of complex alternatives.

Hopfield and David Tank of the AT&T Bell Laboratories in Holmdel, N.J., have used a simulated neural network to solve the classic “traveling salesman” problem, which involves finding the most efficient route for a salesman to travel among a set of cities on his route.

“This problem is exceptionally difficult for digital computers to solve because of the number of possibilities that must be considered,” Hopfield said. “In real life, however, it is not necessary to find the absolute best answer, only a very good one.”

In the case of a system with 10 cities, Hopfield said, “the system would find the best route 50% of the time, but it would find one of the two best routes 90% of the time--and it did it in a fraction of the time required by a digital computer.”
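To give a sense of the number of possibilities involved, the count of distinct round trips can be worked out directly. The sketch below assumes the usual convention that a tour is a closed loop, so that the starting city and the direction of travel do not make a route different; the function name `distinct_tours` is chosen here for illustration.

```python
from math import factorial

def distinct_tours(n_cities):
    """Count closed tours, ignoring starting city and direction of travel."""
    if n_cities < 3:
        return 1  # with one or two cities there is only one possible trip
    return factorial(n_cities - 1) // 2

print(distinct_tours(10))
```

Under that convention a 10-city problem already has 181,440 possible routes, and the count grows factorially as cities are added.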

Scientists have only recently begun to construct hardware using Hopfield’s designs. Carver Mead of Caltech, for example, constructed a microchip containing the equivalent of 22 neurons, with 484 interconnections.

Big Storage Capacity

“As is the case with many microchips when they are first designed, a few percent of the intersections failed,” Hopfield said. “It didn’t matter, however. The chip worked fine.” In early March, Lawrence Jackel and his colleagues at Bell produced a microchip containing the equivalent of 256 neurons with about 64,000 interconnections. They have just started testing the chip.
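The interconnection figures quoted for the two chips are consistent with every neuron being wired to every other. As a rough check, and assuming (this is an inference from the numbers, not something the designers are quoted as saying) that the connections are laid out as a square grid with one site for each pair of input and output lines:

```python
def connection_sites(n_neurons):
    """Sites in an assumed n-by-n connection grid, one per input/output pair."""
    return n_neurons * n_neurons

for n in (22, 256):
    print(n, connection_sites(n))
```

This gives 484 sites for 22 neurons and 65,536 for 256 neurons, in line with the "about 64,000" cited, and it shows how quickly the wiring grows as neurons are added.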

“The best thing about the new associative circuits,” Jackel said, “is that they can be made at incredibly high densities. We can . . . store 10 times as much information.”

The associative memory does not have to be constructed from electronic components, however. Demetri Psaltis and his colleagues at Caltech have built an exact analog of Hopfield’s system using only optical components--lasers and holograms. They have found that the system can be used to recall images of faces.

Psaltis thinks that an optically based associative memory could be used for storage of images in a very concentrated form. “It is very difficult, for example, to store and access radar images produced by the space shuttle,” Psaltis said. “A larger version of our system could do this readily.”

An alternative to the Hopfield model has been developed by Lapedes of Los Alamos. Lapedes’ system removes some restrictions imposed in Hopfield’s model and, in the process, “we get more control,” he said.

Can Set Priorities

In pattern recognition, for example, Hopfield’s model gives equal weight to all features of the pattern. “In identifying bears, the system might have one data point for size, one for color, one for paws and claws, and so forth,” Lapedes said. “But we might be looking at a real bear or a teddy bear.

“But a real bear would be in motion. In our system, we can give a lot more importance to motion, so that we can identify a real bear much more quickly.” No one has yet constructed hardware incorporating Lapedes’ ideas, however.

The major problem with all of these systems at present is that they are merely read-only memories. Once they are constructed, their memories cannot be changed.

“It appears that new memories in the human brain are stored by changing the strength of the connections between neurons,” Hopfield said. “What we need to do is to find a way to change the resistance of the connections in our model so that the system can ‘learn.’ This presents a very serious materials problem.”

Not Insoluble

But not an insoluble one. Psaltis has, in fact, constructed rudimentary systems in which the memories can be changed, he said, “but they are not ready for practical applications.”

Meanwhile, it is possible to simulate that learning experience using a digital computer.

Hopfield and Tank, for example, are attempting to model the learning process of the garden slug. “The slug is one of the simplest biological systems available,” Hopfield said, “since it has only 5,000 to 10,000 neurons,” compared to 10 billion in the human brain.

Even so, the slug can undergo conditioning just like Pavlov’s dogs, which learned to salivate at the sound of a bell. If a slug is fed quinine, which has a bitter taste, before it is given a certain food, the slug will avoid that food in the future. If the quinine is given after the food, however, the slug learns nothing.

Hopfield and Tank have managed to reproduce this behavior. When their electronic slug is, in effect, given a bitter substance before it eats a food, it will no longer eat that food. But when the bitter taste is given afterward, there is no effect.

This result, Hopfield said, indicates that the strong feeling of time sequence that animals and humans possess may originate at the very basic level of the single nerve cell and its interconnections.