When UCI Researchers Duplicated Brain Circuitry in a Program, They Had No Idea That It Would Begin <i>Acting</i> Like a Brain: New Thinking on Computers

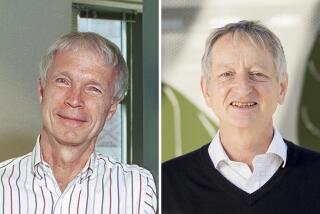

Richard Granger remembers that when he and Gary Lynch, both UC Irvine professors, planned their experiment back in the ’80s, they agreed not to tell anyone about it.

“This was a ridiculous thing we were doing,” Granger says. “After our first paper was published, people looked at our progress like people look at a street accident--with morbid curiosity.”

Granger, a computer expert, and Lynch, a brain memory researcher, had decided to map the circuits of a small piece of rat brain, simplify them, then duplicate them in a computer program--just to see what happened.

“People have a hard time believing this, but it’s the absolute truth,” Lynch says. “We didn’t ask what it does; we just reproduced it. And we did not know what this thing would do.”

They started feeding the computer signals that simulated the electrical impulses smells create in the brain. Not too surprisingly, the computer stored memories of the smells as the brain does and could recognize them when it “smelled” them again.

But one night as Lynch puttered with the program, the computer started doing something new. Fed a simulated smell, it not only sent back the recognition signal, it sent back a preliminary signal as well.

What was this new signal?

Lynch was puzzled. “I thought we’d screwed up,” Granger recalls. But months later, the answer emerged, and “it was a chilling moment,” Lynch recalls.

The second signal denoted a category, a grouping of similar smells that the computer had devised, all on its own. Without being told to do it, the computer had grouped all flower smells together, all cheese smells together, just as the brain does.

“It had spontaneously reproduced a psychological process,” Lynch says. “It did it because that’s how brain circuits are designed to operate.

“You and a rat and every mammal do it without thinking. You don’t go through life saying, sniff, ‘Oh, there’s that low-fat green-onion Monterey jack.’ You say, sniff, ‘There’s some cheese.’ If you want more details, you go back again and sniff a couple more times.”

Lynch says that once the computer was “wired” like a brain, it acted like a brain. He could not instruct it to record and sort smells. He could only present it with “smells” and let it do what it pleased with them.

“It had to go through a period of development, like an animal. I know this is freaky stuff, but it’s true. It’s all published. It had to learn.”

Once it had memorized enough smells and had sorted them into categories, it was able to do some sophisticated recognition, Lynch says. It could detect smells masked by stronger, different smells. “It would say, ‘That’s roses,’ and then it would say, ‘and there’s a magnolia and some Cheddar in there, too.’ ”

If the computer had failed, there would have been no way to reprogram it. “If he’s broke, it’s your fault, because you brought him up wrong,” Lynch says. “There’s nothing you can do but erase it all and start all over again, and that’s a fact.”

But all was going well, so Lynch and Granger graduated their computer to more difficult tasks. Ten thousand randomly selected words were fed to it, and it sorted those by textual similarities. Then the words were fed in as sound, and they, too, were categorized, but according to sound similarities.

A human brain has 10 billion brain cells or neurons, but in Lynch and Granger’s computer “there were only 1,000 simulated neurons,” Lynch says. “But it learned to recognize 10,000 words. We actually calculated that if we could build a model with 100,000 neurons, we could have taught that network a new word every five seconds for 50 years and it would still be sucking up the words with no problems--and categorizing them.”
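For a sense of scale, Lynch's figure of a new word every five seconds for 50 years can be worked out directly. The sketch below assumes 365-day years and teaching around the clock; both are assumptions made for the arithmetic, not details from the researchers, and the function name is hypothetical.

```python
# Assumption: 365-day years and nonstop teaching, 24 hours a day.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def words_taught(years: int, seconds_per_word: int) -> int:
    """Count how many words fit into `years` at one word per `seconds_per_word`."""
    return years * SECONDS_PER_YEAR // seconds_per_word


total = words_taught(50, 5)
print(f"{total:,}")  # 315,360,000
```

Under those assumptions the total comes to roughly 315 million words, tens of thousands of times the 10,000 the 1,000-neuron model had learned.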

But this computer was behaving brain-like in some other important ways, Lynch says. Typical computers slow down as their tasks become more demanding, but this computer did not. The reason, he says, is that unlike the circuits of a typical computer, brain circuits do not handle information one step at a time.

A computer goes about its calculations in a most inefficient way. If it’s multiplying 3 times 4, for example, it figures thus: 3 plus 3 equals 6. OK, now add 3 more, that’s 9. Have I done that four times yet? No. OK, add 3 more, that’s 12. Now have I done it four times? Yes. OK, the answer is 12.

But since computers can calculate each step in billionths of a second, the entire calculation seems instantaneous.
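The step-by-step picture above can be written out as a short program. This is only a sketch of the one-step-at-a-time idea as the article describes it, not a claim about how any particular chip multiplies; the function name is hypothetical.

```python
def multiply_by_repeated_addition(value: int, times: int) -> tuple[int, int]:
    """Return (product, additions performed) for a non-negative `times`."""
    total = 0
    additions = 0
    while additions < times:  # "Have I done that four times yet?"
        total += value        # add the value once more
        additions += 1
    return total, additions


product, additions = multiply_by_repeated_addition(3, 4)
print(product, additions)  # 12 4
```

Each pass through the loop has to finish before the next can begin, which is the assembly-line behavior at issue.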

Give the typical computer a complicated task, however, and it’s slow as a tax refund. Each part of the task is laid out assembly-line fashion. It can’t do Step Two until Step One is done. If its task is to recognize the face on the TV screen, it scans it bit by tiny bit.

“But the brain’s sensor for light is the eye,” Lynch says. “The eye does not scan the TV screen; it has x-jillion cells that each receive a tiny bit of the light. Each eye cell is attached to a separate neuron, so all this floods simultaneously into the brain, and the brain handles it all at once.”

In computer talk, that’s “parallel processing,” the Holy Grail of computer designers. Lynch and Granger had created a version of it by serendipity.

The bad news? The computer was also like the brain in its fallibility.

In a computer, if A caused B to happen, then A will always cause B to happen, short of a mechanical breakdown. In a brain, if A causes cell B to fire, A probably will cause B to fire next time, too. But maybe not.

Brain circuitry compensates for this fallibility by doing things more than once, forming alternate routes to the same information. And there are so many cells involved in a single memory--a million, perhaps--that if 500 don’t fire, so what?
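The "so what?" can be illustrated with a toy simulation. Everything in it is an assumption chosen for illustration: the per-cell firing probability, the fraction of cells that must fire for the memory to count as recalled, and the function name. It is not the Lynch-Granger model.

```python
import random


def recall(n_cells: int, fire_probability: float, needed_fraction: float,
           rng: random.Random) -> tuple[bool, int]:
    """Fire each cell independently; the memory counts as recalled
    if at least `needed_fraction` of the cells fired."""
    fired = sum(1 for _ in range(n_cells) if rng.random() < fire_probability)
    return fired >= needed_fraction * n_cells, fired


rng = random.Random(0)  # fixed seed so the run is repeatable
# Hypothetical numbers: a million cells, each failing about 1 time in 2,000,
# so roughly 500 stay silent; 90% must fire for the memory to be recalled.
recalled, fired = recall(1_000_000, 0.9995, 0.9, rng)
print(recalled, 1_000_000 - fired)  # recalled flag, number of silent cells
```

With a few hundred unreliable cells out of a million, the count that fired stays far above the threshold, so the memory survives even though no single cell can be trusted.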

*

Last year, it finally came time to put the simulated brain to a practical test. Lynch and Granger sent their specifications to the Navy’s Office of Naval Research, which has spent billions of dollars trying to perfect devices for recognizing sonar signals, the underwater sounds that can tell you whether there’s a submarine or a whale nearby.

The results of the Navy’s tests were announced in the summer. When dealing with “difficult classifications, which involved real ocean passive acoustic signals,” Lynch and Granger’s program recognized 95% of the signals and gave no false alarms, the Navy reported. By comparison, “the best artificial net developed by a defense contractor” recognized only 60% of the signals and gave 25% false alarms. Besides that, the Navy said, the Lynch-Granger program would work in smaller computers.

Thomas McKenna, the Navy’s program manager for computational neuroscience, says the program from UCI shows “lots of promise. Our group is betting on thinking computers as the future for the Navy,” he says. “We believe they will lead to smart sensors and adaptable robots.”

Joel Davis, another Navy scientific officer, says the Lynch-Granger program follows biological patterns more closely than any previous “neural” program. It’s strongest where traditional programs are weakest: recognizing complex patterns. Besides classifying sonar signals, it might, for example, recognize the vibration patterns of mechanical parts about to fail and give warning, Davis says.

But, he adds, “this field is in its infancy.”

*

Computer chips wired like brain circuitry are being designed and manufactured now, Lynch says. “When we get the hardware for this, we’ll be able to go much larger and much faster. When we get hardware, then things will get very interesting.”

Will this lead to truly intelligent computers? Is HAL, the thinking, feeling computer in the movie “2001,” a real possibility?

Lynch: “When you have these kinds of computer networks emphasizing human-like features, I think we will see psychological processes emerge that will have a resemblance to things like thinking. But they’ll be stuff we weren’t really aware was there in ourselves. Until I told you you classify your memories into categories, you didn’t sense it was happening in you.

“I don’t know of any other way we’re going to penetrate the issue of what it is we really are. I think people are right to say that strange things could happen along the way here. When you start talking about a machine with millions of neurons, some new levels of questions come up about what the hell it is that’s actually sitting there.

“But what approach do we have to really finding out what we are? I’m not saying this is the only path to wisdom. But this is certainly another approach to the question of what it is that we do when we talk about thinking, when we talk about intelligent behavior.”