Silicon Valley’s foundation stone: Moore’s Law turns 50

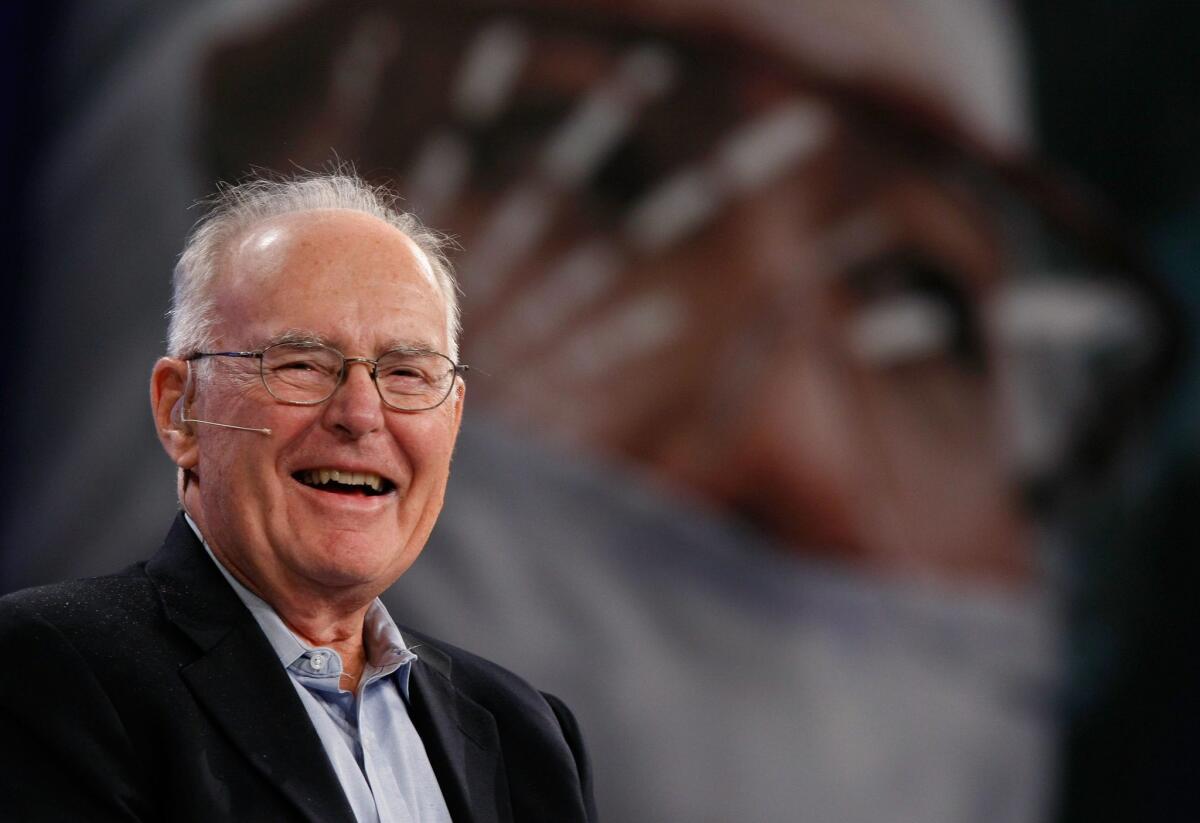

Engineer and visionary: Gordon Moore in 2007.


Fifty years ago last Sunday, Electronics Magazine published a very technical paper by a youthful pioneer in the silicon chip business named Gordon Moore.

Inelegantly titled “Cramming more components onto integrated circuits,” the article observed that the number of electronic components then fitting on a chip--at least in the laboratory--was about 60.

Moore was then research director of Fairchild Semiconductor, which had been formed in 1957 by eight engineers who had left William Shockley’s prototypical Silicon Valley startup, Shockley Semiconductor, in discontent over their boss’s manipulative and paranoid behavior. (Shockley called them the “traitorous eight.”)

Moore, who would later co-found Intel, noted in his article that the number of components on a chip had roughly doubled every year since the first silicon oxide transistor in 1959. Moore “blindly extrapolated” that trend 10 years into the future, as he recalled later. By then, he computed, a single chip would hold 65,000 components.

Thus was Moore’s Law born.

Moore’s Law remains one of the most poorly understood and underappreciated landmarks of the silicon revolution. Moore, now 86, didn’t posit it as a “law” himself--he simply observed the trends of the previous five or six years and projected them forward as far as he considered prudent. “I had no idea this was going to be an accurate prediction,” he said later; over the next 10 years there were not 10 doublings but nine, but that “still followed pretty well along the curve.”
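The arithmetic behind the extrapolation is simple enough to sketch in a few lines of Python. This is an illustration only, not a reconstruction of Moore's own calculation: it assumes a starting point of about 60 components in 1965 and a clean doubling each year, and the function name is made up for the example.

```python
def project_components(start_count, doublings):
    """Project a component count forward by a given number of doublings."""
    return start_count * 2 ** doublings


# Assumption: roughly 60 components per chip in 1965, as cited in the article.
START_1965 = 60

ten_doublings = project_components(START_1965, 10)   # one doubling per year for 10 years
nine_doublings = project_components(START_1965, 9)   # what Moore says actually happened

print(f"10 doublings: {ten_doublings:,} components")
print(f" 9 doublings: {nine_doublings:,} components")
```

Under these assumptions, ten doublings yield about 61,000 components, the same ballpark as the 65,000 Moore computed, while nine doublings yield about half that. On a chart that spans three orders of magnitude, a miss by a factor of two still sits close to the curve.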

The observation was dubbed Moore’s Law a few years after that, by Carver Mead of Caltech. Mead went further: He and Lynn Conway of the Xerox Palo Alto Research Center, or PARC, codified the principles of designing very large-scale integrated circuits, a crucial step forward for the entire industry.

Calling Moore’s observation a “law” had the drawback of suggesting that Moore had derived an immutable trend, prompting people to measure the progress of integrated circuit design against an imagined curve. That’s not Moore’s doing. The most common projection, that complexity would double every 18 months, was a further rough calculation by Dave House, then of Intel.

“I never said 18 months; that’s the way it often gets quoted,” Moore said. Instead, his goal was to show that the standards of design and manufacture in 1965, when integrated circuits were more expensive to assemble than their transistors and other components were separately, wouldn’t last--that very soon, the relationship would turn on its head. Today, a single microprocessor contains billions of microscopic components.
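How much the doubling period matters becomes clear when the two figures are compounded over the same span. The sketch below is a hypothetical comparison under idealized assumptions (perfectly steady doubling, a 10-year window); the function name is illustrative and does not come from Moore or House.

```python
def growth_factor(years, doubling_period_months):
    """Return the multiplier after `years` of steady doubling at the given period."""
    doublings = years * 12 / doubling_period_months
    return 2 ** doublings


# Compare the yearly doubling Moore observed in 1965 with the
# 18-month figure that is often quoted as Moore's Law.
for months in (12, 18):
    factor = growth_factor(10, months)
    print(f"{months}-month doubling over 10 years: about {factor:,.0f}x")
```

Over a decade, doubling every 12 months compounds to roughly a thousandfold increase, while doubling every 18 months compounds to roughly a hundredfold. The two versions of the "law" diverge by an order of magnitude in just 10 years.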

Moore was expressing a world-changing faith in technological progress. “Moore’s Law is an example of a tangible belief in the future--that the future isn’t just a rosy glow,” Mead, who is Caltech’s Gordon and Betty Moore professor of engineering and applied science emeritus, told me in 2005, on the occasion of the article’s 40th anniversary.

“People thought integrated circuits were very expensive, that only the military could afford the technology,” Moore told me on the same occasion. “But I could see that things were beginning to change. This was going to be the way to make inexpensive electronics.”

What might be most unappreciated about Moore’s 1965 article was his perceptiveness about how integrated circuits would become integrated into our daily lives. The chips “will lead to such wonders as home computers--or at least terminals connected to a central computer--automatic controls for automobiles, and personal portable communications equipment.”

Xerox PARC’s pioneering Alto personal computer was then still a hypothesis taking shape in the mind of PARC’s Alan Kay, its physical creation by Kay, Butler Lampson, and Chuck Thacker nearly a decade in the future. Equipping a car with computerized systems was scarcely even science fiction, and the mobile car phones of the era looked like office desk phones, connected to radio transmitters the size of bucket seats.

Almost from the moment Moore made his initial observation, the world has been speculating about when the trend he spotted would run out of steam. Ten years ago he acknowledged that “one of these days we’re going to have to stop making things smaller.” But so far, every time the industry has neared a presumed physical limit, some technological breakthrough has given it more life.

“I’m periodically amazed at how we’re able to make progress,” Moore said in an Intel video in 2005. “Several times along the way, I thought we reached the end of the line, and our creative engineers come up with ways around them. I can think of at least three to four things that seemed like formidable barriers that we just blew past without any hesitation.” He still had an engineer’s caution about making predictions too far into the future, and a visionary’s assurance that the future would make the present look old.

“As engineers,” he told me then, “we’re always way too optimistic in the short run. But in the long run, things will always evolve much further than we can see.”

Keep up to date with the Economy Hub. Follow @hiltzikm on Twitter, see our Facebook page, or email mhiltzik@latimes.com.
