Op-Ed: We’ve unleashed AI. Now we need a treaty to control it

Fifty years ago this month, in the midst of the Cold War, nations began signing an international treaty to stop the spread of nuclear weapons. Today, as artificial intelligence and machine learning reshape every aspect of our lives, the world confronts a challenge of similar magnitude, and it needs a similar response.

There is a danger in pushing the parallel between nuclear weapons and AI too far. But the greater risk lies in ignoring the consequences of unleashing technologies whose goals are neither predictable nor aligned with our values.

The immediate prelude to the Treaty on Non-Proliferation of Nuclear Weapons was the Cuban missile crisis in 1962. The United States and the Soviet Union went to the brink of nuclear war before reason intervened. A few months later, with that near-catastrophe on his mind, President Kennedy warned that as many as 25 countries could have nuclear weapons by 1975, a sharp rise in the risk of Armageddon.

The non-proliferation treaty, which went into effect in 1970, rested on a central bargain: Nations without nuclear weapons promised never to acquire them, and those with them agreed to share nuclear technology for peaceful purposes and eventually to disarm.

Reasonable people can argue about the effectiveness of the treaty — in the intervening years, four more countries acquired nuclear weapons. But the facts are that Kennedy’s dire prediction did not come true, and nuclear war has so far been avoided.

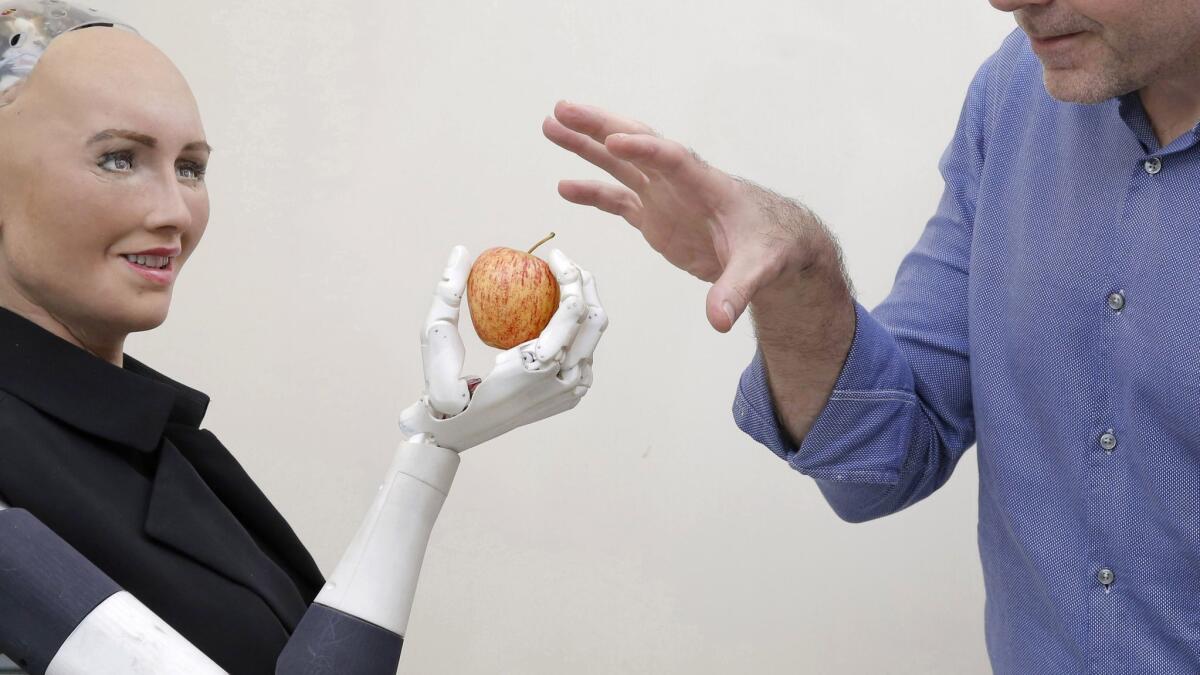

Artificial intelligence has not yet confronted a crisis like the showdown between the USSR and the U.S. in Cuba. By and large, AI has provided us with amazingly beneficial tools. “Learning algorithms” on our digital devices extract patterns from data to influence what we buy, watch and read. On a grander scale, AI helps doctors detect and treat diseases, opens new markets and improves productivity for business, and creates data sets and models that address critical issues related to education, energy and the environment.

At the same time, AI’s perils are apparent. Google won’t renew a U.S. Defense Department contract that involved using artificial intelligence to improve drone targeting and enhance surveillance because 4,000 of its employees objected to the use of their work for lethal purposes.

Similar concerns led Jeff Bezos, the founder of Amazon, to express fear recently about the uses of AI in lethal autonomous weapons. He proposed “a big treaty … something that would help regulate these weapons.”

The danger that autonomous weapons will alter the nature of war and eliminate human control over warmaking is real. So is the risk of accidents involving driverless vehicles and the threat to liberty and critical thinking posed by the misuse of Facebook and search engine files. Other dangers are just as real but less obvious.

First, familiarity breeds complacency. We are seduced by machines that make work and play easier, so we ignore the fact that those same machines increase our vulnerability to threats to civil liberties, democracy and economic equality.

Second, the complexity of AI science may lead policymakers and the public to believe the tech industry is best positioned to decide the future of AI. Yet the issues AI raises should certainly not be left to commercial interests.

Third, the dominance of China and the United States and a few tech giants creates the real prospect of digital feudalism. Concentration of huge amounts of wealth and power in the hands of a few means enormous numbers of people — entire countries and continents — could be left behind.

The ultimate solution to these challenges is a new grand bargain on the scale of the non-proliferation treaty: Nations agree to share the beneficial uses of artificial intelligence and accept universal safeguards to protect against the misuse of these powerful technologies.

The treaty would enshrine certain basic principles. The concept of “human-in-command” to guarantee that people retain control over AI should be a priority. Standards would be set for monitoring AI systems. Fundamental human rights should be specifically protected. A new international body should be created for oversight, similar to the International Atomic Energy Agency.

The obstacles are apparent, from rogue nations and monopoly-minded companies to the sorry state of international cooperation. But advances in AI and machine learning are moving so fast that today seems like yesterday, making the challenge urgent.

Fortunately, the conversation has begun. Industry groups, think tanks and policymakers are tackling issues like economics, law, security, human rights and environmental protection. The leading industrial economies, through the G-7 and G-20, are examining AI’s effect on growth and productivity. The United Nations is debating a ban on fully autonomous lethal weapons. The Organization for Economic Cooperation and Development and the European Union are weighing principles to guide the future of AI.

It took enormous effort to develop, negotiate and finalize a non-proliferation treaty that is still evolving today. Any attempt to control the power of AI will be just as fraught, bumpy and epic. AI is technology that must be controlled. The world reached consensus in the 1960s and reined in an existential risk. It can be done again.

Douglas Frantz, a former managing editor of The Times, was deputy secretary general of the Organization for Economic Cooperation and Development from 2015 to 2017.
