Engineers at NASA’s Jet Propulsion Laboratory are taking artificial intelligence to the next level — by sending it into space disguised as a robotic snake.

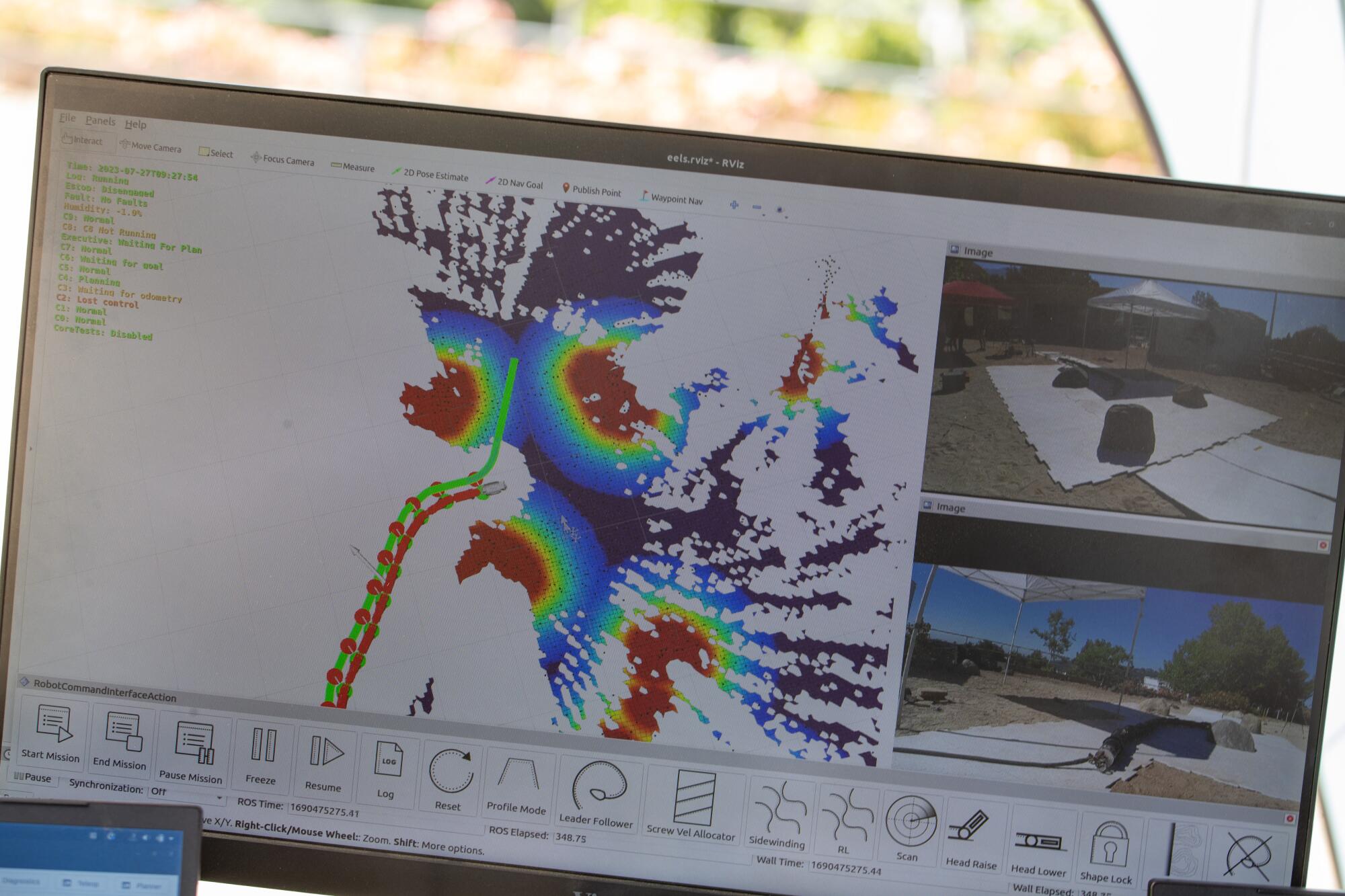

With the sun beating down on JPL’s Mars Yard, the robot lifts its “head” off a glossy surface of faux ice to scan the world around it. It maps its surroundings, analyzes potential obstacles and chooses the safest path through a valley of fake boulders to the destination it has been instructed to reach.

Once it has a plan in place, the 14-foot-long robot lowers its head, engages its 48 motors and slowly slithers forward. Its cautious movements are propelled by the clockwise or counterclockwise turns of the spiral connectors that link its 10 body segments, sending the robot in a specific direction. The entire time, sensors all along its body continue to reevaluate the environs, allowing the robot to make adjustments if needed.

JPL engineers have created spacecraft to orbit distant planets and built rovers that rumble around Mars as though they’re commuting to the office. But EELS — short for Exobiology Extant Life Surveyor — is designed to go places that have never been accessible to humans or robots before.

The lava tubes on the moon? EELS could scope out the underground tunnels, which may provide shelter to future astronauts.

The polar ice caps on Mars? EELS would be able to explore them and deploy instruments to collect chemical and structural information about the frozen carbon dioxide.

The liquid ocean beneath the frozen surface of Enceladus? EELS could tunnel its way there and look for evidence that the Saturnian moon might be hospitable to life.

Scientists say Saturn’s moon Enceladus, with its subsurface ocean and geysers spewing water and complex organic molecules, is one of the most promising places in the solar system to look for extraterrestrial life.

“You’re talking about a snake robot that can do surface traversal on ice, go through holes and swim underwater: one robot that can conquer all three worlds,” said Rohan Thakker, a robotics technologist at JPL. “No one has done that before.”

And if things go according to plan, the slithering space explorer developed with grant money from Caltech will do all of these things autonomously, without having to wait for detailed commands from handlers at the NASA lab in La Cañada Flintridge. Though still years away from its first official deployment, EELS is already learning how to hone its decision-making skills so it can navigate even dangerous terrain independently.

Hiro Ono, leader of JPL’s Robotic Surface Mobility Group, started out seven years ago with a different vision for exploring Enceladus and another watery moon in orbit around Jupiter called Europa. He imagined a three-part system consisting of a surface module that generated power and communicated with Earth; a descent module that picked its way through a moon’s icy crust; and an autonomous underwater vehicle that explored the subsurface ocean.

EELS replaces all of that.

Thanks to its serpentine anatomy, this new space explorer can go forward and backward in a straight line, slither like a snake, move its entire body like a windshield wiper, curl itself into a circle, and lift its head and tail. The result is a robot that can’t be stymied by deep craters, icy terrain or small spaces.

“The most interesting science is sometimes in places that are difficult to reach,” said Matt Robinson, the project manager for EELS. Rovers struggle with steep slopes and irregular surfaces. But a snake-like robot would be able to reach places such as an underground lunar cave or the near-vertical wall of a crater, he said.

The farther away a spacecraft is, the longer it takes for human commands to reach it. The rovers on Mars are remote-controlled by humans at JPL, and depending on the relative positions of Earth and Mars, it can take five to 20 minutes for messages to travel between them.

Enceladus, on the other hand, can be anywhere from 746 million to more than 1 billion miles from Earth. A radio transmission from way out there would take at least an hour to arrive, and perhaps as long as an hour and a half. If EELS found itself in jeopardy and needed human help to get out of it, its fate might be sealed by the time its SOS received a reply.
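The delay figures above follow from dividing the distance by the speed of light. A minimal sketch of that arithmetic, assuming a speed of light of roughly 186,282 miles per second and using the article's two distance estimates (the function and variable names are illustrative only):

```python
# Approximate speed of light in a vacuum, in miles per second (assumed constant).
SPEED_OF_LIGHT_MILES_PER_SEC = 186_282.0


def one_way_delay_minutes(distance_miles: float) -> float:
    """Return how long a radio signal takes to cover the distance, in minutes."""
    seconds = distance_miles / SPEED_OF_LIGHT_MILES_PER_SEC
    return seconds / 60.0


# Distances between Earth and Enceladus quoted in the article.
nearest = one_way_delay_minutes(746e6)   # about 746 million miles
farthest = one_way_delay_minutes(1e9)    # about 1 billion miles

print(f"One-way delay: {nearest:.0f} to {farthest:.0f} minutes")
```

These are one-way times. A distress call followed by a reply would need a full round trip, which doubles them.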

“Most people get frustrated when their video game has a few-second lag,” Robinson said. “Imagine controlling a spacecraft that’s in a dangerous area and has a 50-minute lag.”

That’s why EELS is learning how to make its own choices about getting from Point A to Point B.

Teaching the robot how to assess its environment and make decisions quickly is a multi-step process.

First, EELS is taught to be safe. With the help of software that calculates the probability of failures — such as crashing into something or getting stuck — EELS is learning to identify potentially dangerous situations. For instance, it is figuring out that when something like fog interferes with its ability to map the world around it, it should respond by proceeding more cautiously, said Thakker, the autonomy lead for the project.

It also relies on its array of built-in sensors. Some can detect a change in its orientation with respect to gravity — the robot equivalent of feeling as though you’re falling. Others measure the stability of the ground and can tell whether hard ice suddenly turns into loose snow, so that EELS can maneuver itself to a more navigable surface, Thakker said.

In addition, EELS is able to incorporate past experiences into its decision-making process — in other words, it learns. But it does so a little differently than a typical robot powered by artificial intelligence.

For example, if an AI robot were to spot a puddle of water, it might investigate a bit before jumping in. The next time it encountered a puddle, it would recognize it, remember that it was safe and jump in.

But that could be deadly in a dynamic environment. Thanks to EELS’ extra programming, it would know to evaluate the puddle every single time. Just because it was safe once doesn’t guarantee it will be safe again.

In the Mars Yard, a half-acre rocky sandbox used to test rovers, Thakker and the team assign EELS a specific destination. Then it’s up to the robot to use its sensors to scan the world around it and plot the best path forward, whether it’s directly in the dirt or on white mats made to mimic ice.

It’s similar to the navigation of a self-driving car, except there are no stop signs or speed limits to help EELS develop its strategy, Thakker said.

EELS has also been tested on an ice rink, on a glacier and in snow. With its spiral treads for traction and multiple body segments for flexibility, it can wiggle itself out of all sorts of tricky terrain.

The robot isn’t the only one learning. As its human handlers monitor EELS’ progress, they adjust its software to help it make better assessments of its surroundings, Robinson said.

“It’s not like an equation you can just solve,” Ono said. “It can often be more of an art than science. ... A lot comes from experience.”

The goal is for EELS to gain enough experience to be sent out on its own in any kind of setting.

“We aren’t there yet,” Ono said. But EELS’ recent advances amount to “one small step for the robot and one large step for mankind.”