Sam Altman to be reinstated as head of OpenAI just days after his firing

Sam Altman, the ousted leader of ChatGPT-maker OpenAI, is returning to the company that fired him late last week, capping a days-long power struggle that shocked the tech industry and drew attention to the conflicts over how to safely build artificial intelligence.

Altman will answer to a different board of directors from the one that fired him Friday. The San Francisco-based company said late Tuesday that it had “reached an agreement in principle for Sam Altman to return to OpenAI as CEO with a new initial board.”

The new board will be led by former Salesforce co-CEO Bret Taylor, who also chaired Twitter’s board before its takeover by Elon Musk last year. The other members will be former U.S. Treasury Secretary Larry Summers and Quora CEO Adam D’Angelo.

OpenAI’s previous board of directors, which included D’Angelo, had refused to give specific reasons for firing Altman on Friday, leading to a weekend of internal conflict at the company and growing outside pressure from the startup’s investors.

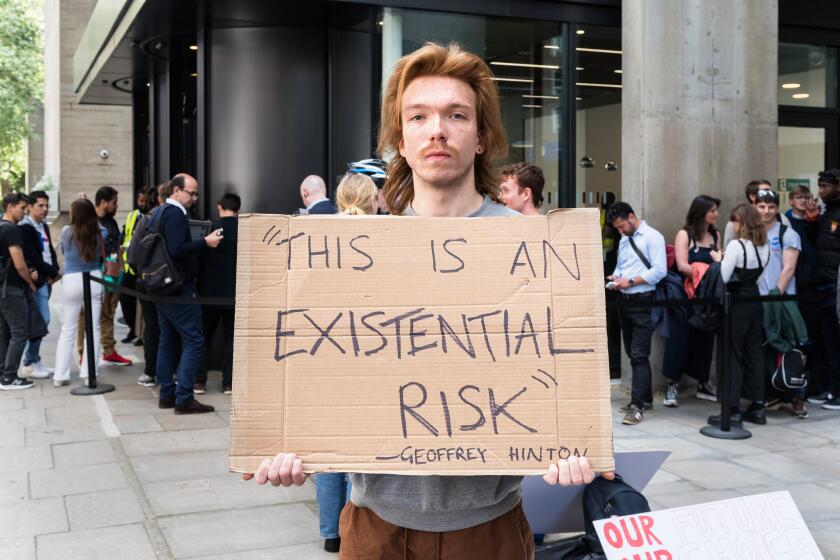

The chaos also accentuated the differences between Altman — who’s become the face of generative AI’s rapid commercialization since ChatGPT’s arrival a year ago — and members of the company’s board who have expressed deep reservations about the safety risks posed by AI as it gets more advanced.

“The OpenAI episode shows how fragile the AI ecosystem is right now, including addressing AI’s risks,” said Johann Laux, an expert at the Oxford Internet Institute focusing on human oversight of artificial intelligence.

Microsoft, which has invested billions of dollars in OpenAI and has rights to its technology, quickly moved to hire Altman on Monday, as well as another co-founder and former president of the company, Greg Brockman, who had quit in protest after Altman’s removal. That emboldened nearly all of the startup’s 770 employees to threaten a mass exodus and to sign a letter calling for the board’s resignation and Altman’s return.

One of the four board members who participated in Altman’s ouster, OpenAI co-founder and chief scientist Ilya Sutskever, later expressed regret and joined the call for the board’s resignation.

Microsoft in recent days had pledged to welcome all employees who wanted to follow Altman and Brockman to a new AI research unit at the software giant. Microsoft CEO Satya Nadella also made clear in a series of interviews Monday that he was still open to the possibility of Altman returning to OpenAI, so long as the startup’s governance problems were solved.

“We are encouraged by the changes to the OpenAI board,” Nadella posted on X late Tuesday. “We believe this is a first essential step on a path to more stable, well-informed, and effective governance.”

In his own post, Altman said that “with the new board and Satya’s support, I’m looking forward to returning to OpenAI, and building on our strong partnership with [Microsoft].”

The leadership drama offers a glimpse into how big tech companies are taking the lead in governing AI and its risks while governments scramble to catch up. The European Union is working to finalize what’s expected to be the world’s first comprehensive AI rules.

In the absence of regulations, “companies decide how a technology is rolled out,” Laux said.

That might be acceptable if you believe that the risks don’t demand government involvement, but “do we believe that for AI?” he said.

Co-founded by Altman as a nonprofit with a mission to safely build so-called artificial general intelligence that’s smarter than humans and benefits humanity, OpenAI later became a for-profit business, but as of earlier Tuesday, it was still run by its nonprofit board of directors. It’s not clear yet if the board’s structure will change with its newly appointed members.

“We are collaborating to figure out the details,” OpenAI posted on X. “Thank you so much for your patience through this.”

Nadella said Brockman, who was OpenAI’s board chairman until Altman’s firing, will also have a key role to play in ensuring that OpenAI “continues to thrive and build on its mission.”

Hours earlier, Brockman returned to social media as if it were business as usual, touting a feature called ChatGPT Voice that was rolling out for free to everyone who uses the chatbot.

“Give it a try — totally changes the ChatGPT experience,” Brockman wrote, flagging a post from OpenAI’s main X account that featured a demonstration of the technology playfully winking at recent turmoil.

“It’s been a long night for the team and we’re hungry. How many 16-inch pizzas should I order for 778 people,” a person asks in the demonstration, using the number of people who work at OpenAI. ChatGPT’s synthetic voice responded by recommending around 195 pizzas, ensuring that everyone would get three slices.

As for OpenAI’s short-lived interim CEO Emmett Shear, the second interim CEO in the days since Altman’s ouster, he posted on X that he was “deeply pleased by this result” after about 72 “very intense hours of work.”

“Coming into OpenAI, I wasn’t sure what the right path would be,” Shear wrote. “This was the pathway that maximized safety alongside doing right by all stakeholders involved. I’m glad to have been a part of the solution.”
