An algorithm to decide early release of inmates: What could go wrong?


Computer algorithms drive online dating sites that promise to hook you up with a compatible mate. They help retailers suggest that, because you liked this book or that movie, you’ll probably be into this music. So it was probably inevitable that programs based on predictive algorithms would be sold to law enforcement agencies on the pitch that they’ll make society safe.

The Los Angeles Police Department feeds crime data into PredPol, which then spits out a report predicting -- reportedly with impressive accuracy -- where “property crimes specifically, burglaries and car break-ins and thefts are statistically more likely to happen.” The idea is, if cops spend more time in these high-crime spots, they can stop crime before it happens.

Chicago police used predictive algorithms designed by an Illinois Institute of Technology engineer to create a 400-suspect “heat list” of “people in the city of Chicago supposedly most likely to be involved in violent crime.” Surprisingly, these Chicagoans -- who receive personal visits from high-ranking cops telling them that they’re being watched -- have never committed a violent crime themselves. But their friends have, and that can be enough.

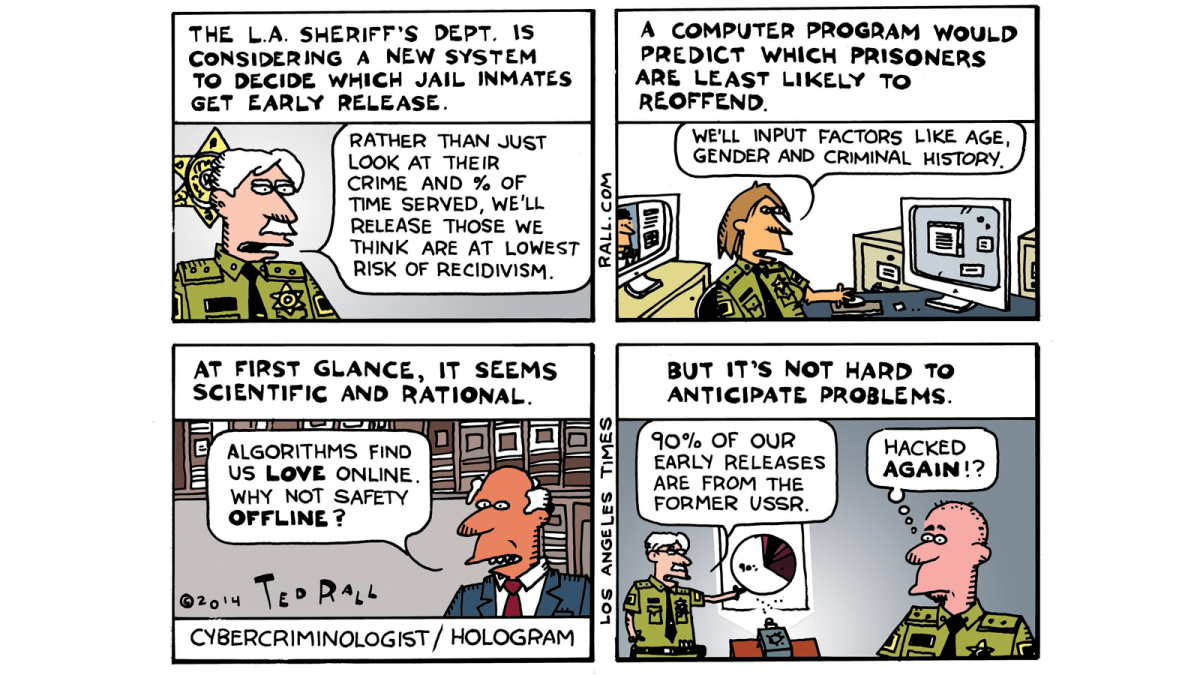

PHOTO GALLERY: Ted Rall cartoons

In other words, today’s not-so-bad guys may be tomorrow’s worst guys ever.

But math can also be used to guess which among yesterday’s bad guys are least likely to reoffend. Never mind what they did in the past. What will they do from now on? California prison officials, under constant pressure to reduce overcrowding, want to limit early releases to the inmates most likely to walk the straight and narrow.

Toward that end, Abby Sewell and Jack Leonard report that the L.A. County Sheriff’s Department is considering changing its current evaluation system for early releases of inmates to one based on algorithms:

Supporters argue the change would help select inmates for early release who are less likely to commit new crimes. But it might also raise some eyebrows. An older offender convicted of a single serious crime, such as child molestation, might be labeled lower-risk than a younger inmate with numerous property and drug convictions.

The Sheriff’s Department is planning to present a proposal for a “risk-based” release system to the Board of Supervisors.

“That’s the smart way to do it,” Interim Sheriff John L. Scott said. “I think the percentage [system, which currently determines when inmates get released by looking at the seriousness of their most recent offense and the percentage of their sentence they have already served] leaves a lot to be desired.”

Washington state uses a system similar to the one the Sheriff's Department has proposed, and it has had a 70% accuracy rate. "A follow-up study … found that about 47% of inmates in the highest-risk group returned to prison within three years, while 10% of those labeled low-risk did."

No one knows which ex-cons will reoffend. No matter how we decide which prisoners walk free before the end of their sentences, whether by a judgment call from corrections officials or by an algorithm, it comes down to human beings guessing what other human beings will do. Behind every high-tech solution, after all, are programmers and analysts who are all too human. Even if that 70% accuracy rate improves, some prisoners who have been rehabilitated and ought to have been released will languish behind bars, while others, dangerous despite best guesses, will go out to kill, maim and rob.

If the Sheriff's Department moves forward with predictive algorithmic analysis, it will be exchanging one set of problems for another.

Technology is morally neutral. It’s what we do with it that makes a difference.

That, and how many Russian hackers manage to game the system.


Ted Rall, who draws a weekly editorial cartoon for The Times, is also a nationally syndicated opinion columnist and author. His new book is "Silk Road to Ruin: Why Central Asia is the New Middle East." Follow Ted Rall on Twitter @TedRall.
